- Course Info

- External Sites

- Coding

- Homework

- Project

- Exams

Project Cache Design

On this page... (hide)

- 1. Overview

- 2. Cache Interface and Organization

- 3. State Machine

- 4. Direct-mapped Cache

- 4.1 Signal Interactions

- 4.2 Perfbench Testing

- 4.3 Randbench Testing

- 4.4 Synthesis

- 5. Two-way Set-associative Cache

- 5.1 Signal Interactions

- 5.2 Replacement Policy

- 5.3 Perfbench Testing

- 5.4 Randbench Testing

- 5.5 Synthesis

- 6. Instantiating Cache Modules

What to submit:

- Check the Handin Instructions page.

- Make sure you run the Verilog rules check on all the files. Not necessary to run it on your testbench.

1. Overview

Your final processor you design for this course will use both instruction and data caches. For this stage of the project, you will be designing and testing a cache to ultimately be used for your final design. You must first design and verify a direct-mapped cache before proceeding to a two-way set-associative cache.

The cache's storage as well as the memory has already been designed for you. You will be implementing the memory system controller to effectively manage the cache. All needed files are included in the original project tar file.

The following files are included in the cache directories (cache_*/).

The mem_system.v file should be the only file that you need to edit.

This semester, we do the project individually and we do not require you to integrate the cache into your processor. You only need to finish two standalone cache designs (cache_direct and cache_assoc) and pass the tracing tests. If you are interested in integrating the cache into your datapath pipeline (tough task and might take a long time!) and you are ready to spend some time attacking this task, please do so in the demo3/ folder, test it against all the tests you ran for demo2, and send us your final solutions.

| File | Description |

| cache.v | the basic cache data structure module outlined below |

| clkrst.v | standard clock reset module |

| final_memory.v | memory banks used in four_bank_mem |

| four_bank_mem.v | four banked memory |

| loadfile_*.img | memory image files |

| mem.addr | sample address trace used by perfbench |

| memc.v | used by the cache to store data |

| mem_system_hier.v | instantiates the mem_system and a clock for the testbench |

| mem_system_perfbench.v | testbench that uses supplied memory access traces |

| mem_system_randbench.v | testbench that uses random memory access patterns |

| mem_system_ref.v | reference memory design used by testbench for comparison |

| mem_system.v | the memory system: the cache and main memory are instantiated here and this is where you will need to make your changes |

| memv.v | used by cache to store valid bits |

| *.syn.v | synthesizable versions of memory |

2. Cache Interface and Organization

This figure shows the external interface to the base cache module. Each signal is described in the table below.

+-------------------+

| |

enable >------| |

index[7:0] >------| cache |

offset[2:0] >------| |

comp >------| 256 lines |-----> hit

write >------| by 4 words |-----> dirty

tag_in[4:0] >------| |-----> tag_out[4:0]

data_in[15:0] >------| |-----> data_out[15:0]

valid_in >------| |-----> valid

| |

clk >------| |

rst >------| |-----> err

createdump >------| |

+-------------------+

| Signal | In/Out | Width | Description |

| enable | In | 1 | Enable cache. Active high. If low, "write" and "comp" have no effect, and all outputs are zero. |

| index | In | 8 | The address bits used to index into the cache memory. |

| offset | In | 3 | offset[2:1] selects which word to access in the cache line. The least significant bit should be 0 for word alignment. If the least significant bit is 1, it is an error condition. |

| comp | In | 1 | Compare. When "comp"=1, the cache will compare tag_in to the tag of the selected line and indicate if a hit has occurred; the data portion of the cache is read or written but writes are suppressed if there is a miss. When "comp"=0, no compare is done and the Tag and Data portions of the cache will both be read or written. |

| write | In | 1 | Write signal. If high at the rising edge of the clock, a write is performed to the data selected by "index" and "offset", and (if "comp"=0) to the tag selected by "index". |

| tag_in | In | 5 | When "comp"=1, this field is compared against stored tags to see if a hit occurred; when "comp"=0 and "write"=1 this field is written into the tag portion of the array. |

| data_in | In | 16 | On a write, the data that is to be written to the location specified by the "index" and "offset" inputs. |

| valid_in | In | 1 | On a write when "comp"=0, the data that is to be written to valid bit at the location specified by the "index" input. |

| clk | In | 1 | Clock signal; rising edge active. |

| rst | In | 1 | Reset signal. When "rst"=1 on the rising edge of the clock, all lines are marked invalid. (The rest of the cache state is not initialized and may contain X's.) |

| createdump | In | 1 | Write contents of entire cache to memory file. Active on rising edge. |

| hit | Out | 1 | Goes high during a compare if the tag at the location specified by the "index" lines matches the "tag_in" lines. |

| dirty | Out | 1 | When this bit is read, it indicates whether this cache line has been written to. It is valid on a read cycle, and also on a compare-write cycle when hit is false. On a write with "comp"=1, the cache sets the dirty bit to 1. On a write with "comp"=0, the dirty bit is reset to 0. |

| tag_out | Out | 5 | When "write"=0, the tag selected by "index" appears on this output. (This value is needed during a writeback.) |

| data_out | Out | 16 | When "write"=0, the data selected by "index" and "offset" appears on this output. |

| valid | Out | 1 | During a read, this output indicates the state of the valid bit in the selected cache line. |

This cache module contains 256 lines. Each line contains one valid bit, one dirty bit, a 5-bit tag, and four 16-bit words:

V D Tag Word 0 Word 1 Word 2 Word 3

___________________________________________________________________________________

|___|___|_______|________________|________________|________________|________________|

|___|___|_______|________________|________________|________________|________________|

|___|___|_______|________________|________________|________________|________________|

|___|___|_______|________________|________________|________________|________________|

Index-------->|___|___|_______|________________|________________|________________|________________|

|___|___|_______|________________|________________|________________|________________|

|___|___|_______|________________|________________|________________|________________|

|___|___|_______|________________|________________|________________|________________|

2.1 Four-banked Memory Module

Four Banked Memory is a better representation of a modern memory system. It breaks the memory into multiple banks. The four-cycle, four-banked memory is broken into two Verilog modules, the top level four_bank_mem.v and single banks final_memory.v. All needed files were included in the project tar file.

final_memory.syn.v must be in the same directory as final_memory.v.

+-------------------+

| |

addr[15:0] >------| four_bank_mem |

data_in[15:0] >------| |

wr >------| 64KB |-----> data_out[15:0]

rd >------| |-----> stall

| |-----> busy[3:0]

clk >------| |-----> err

rst >------| |

createdump >------| |

+-------------------+

Timing:

| | | | | |

| addr | addr etc | read data | | new addr |

| data_in | OK to any | available | | etc. is |

| wr, rd |*diffferent*| | | OK to |

| enable | bank | | | *same* |

| | | | | bank |

<----bank busy; any new request to--->

the *same* bank will stall

This figure shows the external interface to the module. Each signal is described in the table below.

| Signal | In/Out | Width | Description |

| addr | In | 16 | Provides the address to perform an operation on. |

| data_in | In | 16 | Data to be used on a write. |

| wr | In | 1 | When wr="1", the data on DataIn will be written to Mem[Addr] four cycles after wr is asserted. |

| rd | In | 1 | When rd="1", the DataOut will show the value of Mem[Addr] two cycles after rd is asserted. |

| clk | In | 1 | Clock signal; rising edge active. |

| rst | In | 1 | Reset signal. When "rst"=1, the memory will load the data from the file "loadfile". |

| createdump | In | 1 | Write contents of memory to file. Each bank will be written to a different file, named dumpfile_[0-3]. Active on rising edge. |

| data_out | Out | 16 | Two cycles after rd="1", the data at Mem[Addr] will be shown here. |

| stall | Out | 1 | Is set to high when the operation requested at the input cannot be completed because the required bank is busy. |

| busy | Out | 4 | Shows the current status of each bank. High means the bank cannot be accessed. |

| err | Out | 1 | The error signal is raised on an unaligned access. |

This is a byte-aligned, word-addressable 16-bit wide 64K-byte memory.

- Requests may be presented every cycle. They will be directed to one of the four banks depending on the [2:1] bits of the address.

- Two requests to the same bank which are closer than cycles N and N+4 will result in the second request not happening, and a "stall" output being generated.

- Busy output reflects the current status of each individual bank.

- Concurrent read and write are not allowed.

On reset, memory loads from file "loadfile_0.img", "loadfile_1.img", "loadfile_2.img", and "loadfile_3.img". Each file supplies every fourth word. (The latest version of the assembler generates these four files.)

Format of each file:

@0 <hex data 0> <hex data 1> ...etc

If input create_dump is true on rising clock, contents of memory will be dumped to file "dumpfile_0", "dumpfile_1", etc. Each file will be a dump from location 0 up through the highest location modified by a write in that bank.

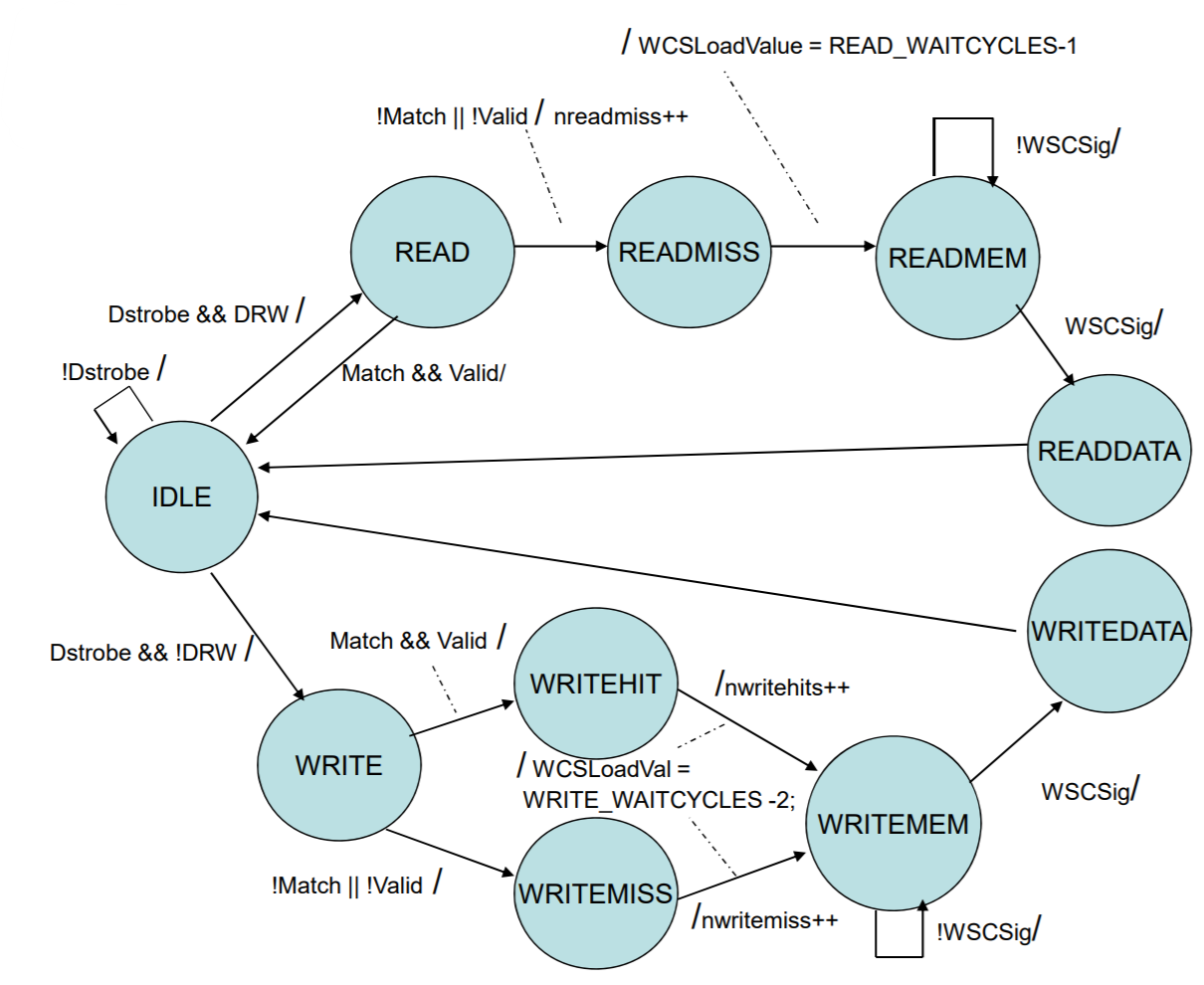

3. State Machine

Now, please take a look at the mem_system module interface.

Notes on the mem_system module:

- The system should ignore new inputs when it is stalled (not an error). Timing will be an important part of this problem; Designs that stall longer than necessary will be docked points.

- The

Donesignal should be asserted for exactly one cycle. If the request can be satisfied in the same cycle that data should be presented,Doneshould be asserted in that same cycle. - The

CacheHitsignal should indicate whether the request was a hit in the cache. Note thathit & ~validshould be treated as a miss. - The

Stalloutput should indicate whether the memory system is stalling, ignoring incoming requests. - The

memtypeparameter decides whether this is an instruction or data cache which is used to generate the names for the dump files.

You will need to determine how your cache is arranged and functions before starting implementation. Draw out the state machine for your cache controller as this will be required. You may implement either a Mealy or Moore machine though a Moore machine is recommended as it will likely be easier. Be forewarned that the resulting state machine will be relatively large so it is the best to start early.

An example cache control state machine looks like:

Original source: http://user.engineering.uiowa.edu/~hpca/lecturenotes/verilogcachelinesize2.pdf.

- This is a Mealy machine. It uses edge outputs for counting hits/misses. You do not need to do that.

- This example does not list its complete outputs. The input signals are also different from ours.

- The inputs to your control FSM are:

- The inputs to the

mem_systemmodule; - The outputs of cache

c0and main memorymem.

- The inputs to the

- The outputs of your control FSM should be:

- The inputs to cache

c0and main memorymem; - The outputs of the

mem_systemmodule.

- The inputs to cache

- This example does not make clear its promotion, eviction, write policy. Your FSM diagram should reflect these steps.

- Notice that we have exact knowledge that our four-banked main memory access takes four cycles for write and two cycles for read. Take this into account.

- We recommend drawing a Moore machine (output solely depends on the state, instead of on each edge). Please make a complete list of all cache control signal values (

{cache c0 inputs, main memory mem inputs, mem_system outputs}) on every state.

The state machine diagram is due several weeks before the cache demo.

4. Direct-mapped Cache

You will first need to implement a memory system of direct-mapped cache over four-banked main memory. Make your changes for this problem in the cache_direct/ directory.

4.1 Signal Interactions

Although there are a lot of signals for the cache, its operation is pretty simple. When "enable" is high, the two main control lines are "comp" and "write". Here are the four cases for the behavior of the direct mapped cache:

- Compare Read (comp = 1, write = 0)

- This case is used when the processor executes a load instruction. The "tag_in", "index", and "offset" signals need to be valid. Either a hit or a miss will occur, as indicated by the "hit" output during the same cycle. If a hit occurs, "data_out" will contain the data and "valid" will indicate if the data is valid. If a miss occurs, the "valid" output will indicate whether the block occupying that line of the cache is valid. The "dirty" output indicates the state of the dirty bit in the cache line.

- Compare Write (comp = 1, write = 1)

- This case occurs when the processor executes a store instruction. The "data_in", "tag_in", "index", and "offset" lines need to be valid. Either a hit or a miss will occur as indicated by the "hit" output during the same cycle. If there is a miss, the cache state will not be modified. If there is a hit, the word will be written at the rising edge of the clock, and the dirty bit of the cache line will be written to "1". (The "dirty" output is not meaningful as this is a write cycle for that bit.) NOTE: On a hit, you also need to look at the "valid" output! If there is a hit, but the line is not valid, you should treat it as a miss.

- Access Read (comp = 0, write = 0)

- This case occurs when you want to read the tag and the data out of the cache memory. You will need to do this when a cache line is victimized, to see if the cache line is dirty and to write it back to memory if necessary. With "comp"=0, the cache basically acts like a RAM. The "index" and "offset" inputs need to be valid to select what to read. The "data_out", "tag_out", "valid", and "dirty" outputs will be valid during the same cycle.

- Access Write (comp = 0, write = 1)

- This case occurs when you bring in data from memory and need to store it in the cache. The "index", "offset", "tag_in", "valid_in" and "data_in" signals need to be valid. On the rising edge of the clock, the values will be written into the specified cache line. Also, the dirty bit will be set to zero.

4.2 Perfbench Testing

To begin testing you will use address traces that you will create to target the different possible aspects of cache behavior.

The perfbench testbench uses address trace files that describe a sequence of reads and writes. You will need to write several (at least 5) address traces to test your cache and the various behavior cases that might occur. You should try to make it so that your traces highlight the various use cases that your cache might experience to be sure that they are working.

An example address trace file (mem.addr) is provided. The format of the file is the following:

- Each line represents a new request

- Each line has 4 numbers separated by a space

- The numbers are:

Wr Rd Addr Value

Once you have created your address traces this testbench can be run as such:

wsrun.pl -addr mem.addr mem_system_perfbench *.v

If it correctly runs you will get output that looks like the following:

# Using trace file mem.addr # LOG: ReQNum 1 Cycle 12 ReqCycle 3 Wr Addr 0x015c Value 0x0018 ValueRef 0x0018 HIT 0 # # LOG: ReqNum 2 Cycle 14 ReqCycle 12 Rd Addr 0x015c Value 0x0018 ValueRef 0x0018 HIT 1 # # LOG: Done all Requests: 2 Replies: 2 Cycles: 14 Hits: 1 # Test status: SUCCESS # Break at mem_system_perfbench.v line 200 # Stopped at mem_system_perfbench.v line 200

If your script stops at the "VSIM >" command line and does not terminate to your shell:

- Use

VSIM > exitto exit out; - Change

mem_system_perfbench.vline 200 from$stopto$finish.

Be aware that just because a SUCCESS message is received it does not guarantee your cache is working correctly. You should use the cache simulator to verify the correct behavior is happening. The cache simulator can be run as follows:

cachesim <associativity> <size_bytes> <block_size_bytes> <trace_file>

So for this problem you would use:

cachesim 1 2048 8 mem.addr

This will generate output like the following:

Store Miss for Address 348 Load Hit for Address 348

You should then compare this to the perfbench output to make sure they both exhibit the same behavior.

The address traces you created should be put in the 'cache_direct/verification' directory and have the '.addr' extension.

We are always using addr[15:11] as tag, addr[10:3] as index, and addr[2:0] as offset. Our index is always 8-bits in length, indicating that our cache always has 256 sets. This means our direct-mapping cache should have the capacity 2048 bytes, and our two-way set-associative cache below will have the capacity 4096 bytes.

4.3 Randbench Testing

Once you are confident that your design is working you should test it using the random testbench. The random bench does the following:

- full random: 1000 memory requests completely random

- small random: 1000 memory requests restricted to addresses 0 to 2046

- sequential addresss: 1000 memory requests restricted to address 0x08, 0x10, 0x18, 0x20, 0x28, 0x30, 0x38, 0x40

- two sets address: 1000 memory requests to test accessing two sets. Design to yield predominantly hits if run on a two-way set-associative cache.

Remember to get your perfbench tests working before attempting to debug the randbench.

You can run the random testbench simply like this:

wsrun.pl mem_system_randbench *.v

At the end of each section you will see a message showing the performance like the following:

LOG: Done two_sets_addr Requests: 4001, Cycles: 79688 Hits: 0

Notice that since we now only have a direct-mapped cache, the two_sets tests will produce 0 hits. But the results should be all correct.

This testbench will ultimately print a message saying either:

# Test status: SUCCESS

or

# Test status: FAIL

Keep in mind that it's considered a success if the correct data is returned every time but that doesn't mean your cache is necessarily working. If you have no hits or a very small number of them, something is still wrong. If you are seeing failures, try to isolate the case that is causing the issues and create a small trace that generates the same behavior to make debugging easier.

4.4 Synthesis

You will need to run synthesis on your direct mapped cache and verify that it does not produce any errors. You should turn in all of the reports generated by synthesis.

Your synthesis results should be placed in the 'cache_direct/synthesis' directory.

5. Two-way Set-associative Cache

You should not start this until you have implemented and fully verified your direct-mapped cache.

Remember to change directories to the cache_assoc/ directory before starting to make changes to your design as you will need to submit both designs. The two-way mem_system is instantiated slightly differently, so beware not to copy your previous mem_system file over and overwrite the new one.

5.1 Signal Interactions

After you have a working design using a direct-mapped cache, you will add a second cache module to make your design two-way set-associative. Here are the four cases again:

- Compare Read (comp = 1, write = 0)

- The "index" and "offset" inputs need to be driven to both cache modules. There is a hit if either hit output goes high. Use one of the hit outputs as a select for a mux between the two data outputs. If there is a miss, decide which cache module to victimize based on this logic: If one is valid, select the other one. If neither is valid, select way zero. If both are valid, use the pseudo-random replacement algorithm specified below.

- Compare Write (comp = 1, write = 1)

- The "index", "offset", and "data" inputs need to be driven to both cache modules. There is a hit if either hit output goes high. Note that only one cache will get written as long as your design ensures that no line can be present in both cache modules.

- Access Read (comp = 0, write = 0)

- After deciding which cache module to victimize, use that select bit to mux the data, valid, and dirty bits from the two cache modules.

- Access Write (comp = 0, write = 1)

- Drive the "index", "offset", "data" and "valid" inputs to both cache modules. Make sure only the correct module has its write input asserted.

5.2 Replacement Policy

In order to make the designs more deterministic and easier to grade, all set-associative caches must implement the following pseudo-random replacement algorithm:

- Have a flipflop called "victimway" which is intialized to zero.

- On each read or write of the cache, invert the state of victimway.

- When installing a line after a cache miss, install in an invalid block if possible. If both ways are invalid, install in way zero.

- If both ways are valid, and a block must be victimized, use victimway (after already being inverted for this access) to indicate which way to use.

Example, using two sets:

start with victimway = 0 load 0x1000 victimway=1; install 0x1000 in way 0 because both free load 0x1010 victimway=0; install 0x1010 in way 0 because both free load 0x1000 victimway=1; hit load 0x2010 victimway=0; install 0x2010 in way 1 because it's free load 0x2000 victimway=1; install 0x2000 in way 1 because it's free load 0x3000 victimway=0; install 0x3000 in way 0 (=victimway) load 0x3010 victimway=1; install 0x3010 in way 1 (=victimway)

5.3 Perfbench Testing

Your testing for the set-associative cache should be done in much the same way. You can either create more address traces or update your previous ones to reflect the differences in behavior the new design would have.

The cache simulator would now be run with slightly different arguments to reflect your changes:

cachesim 2 4096 8 mem.addr pseudoRandom

If you do not specify the pseudoRandom argument it will use an LRU replacement policy instead of the pseudo-random policy you have implemented.

The address traces you used should be put in the 'cache_assoc/verification' directory and have the '.addr' extention.

5.4 Randbench Testing

Now, run the randbench on your two-way set-associative cache. You must still pass all the results comparisons and get a SUCCESS message at the end.

Whats more, your two_sets test should now have hits! The number of hits may vary but should definitely be greater than 0.

LOG: Done two_sets_addr Requests: 4001, Cycles: 79688 Hits: 562

5.5 Synthesis

You will also need to synthesize your set-associative cache. You should turn in all of the reports generated by synthesis.

Your synthesis results should be placed in the 'cache_assoc/synthesis' directory.

6. Instantiating Cache Modules

If you are integrating the cache mem_system into your datapath pipeline in demo3 to replace the perfect single-cycle memory, take a look at the following.

When instantiating the module, there is a parameter which is set for each instance. When you dump the contents of the cache to a set of files (e.g. for debugging), this parameter allows each instance to go to a unique set of filenames.

Parameter Value File Names

--------------- ----------

0 Icache_0_data_0, Icache_0_data_1, Icache_0_tags, ...

1 Dcache_0_data_0, Dcache_0_data_1, Dcache_0_tags, ...

2 Icache_1_data_0, Icache_1_data_1, Icache_1_tags, ...

3 Dcache_1_data_0, Dcache_1_data_1, Dcache_1_tags, ...

Here is an example of instantiating two modules with a parameter value of 0 and 1:

cache #(0) cache0 (enable, index, ... cache #(1) cache1 (enable, index, ...